Research @ UPB-CN

Our research focuses on networked systems, and we strive to make them more efficient and sustainable in supporting modern machine learning applications. We have won several prestigious awards for our research, and our work has been generously supported by various funding sources, including the German Research Foundation (DFG), the Dutch Research Council (NWO), Google Research, Intel, and AMD.

Efficient Deep Learning Inference Serving

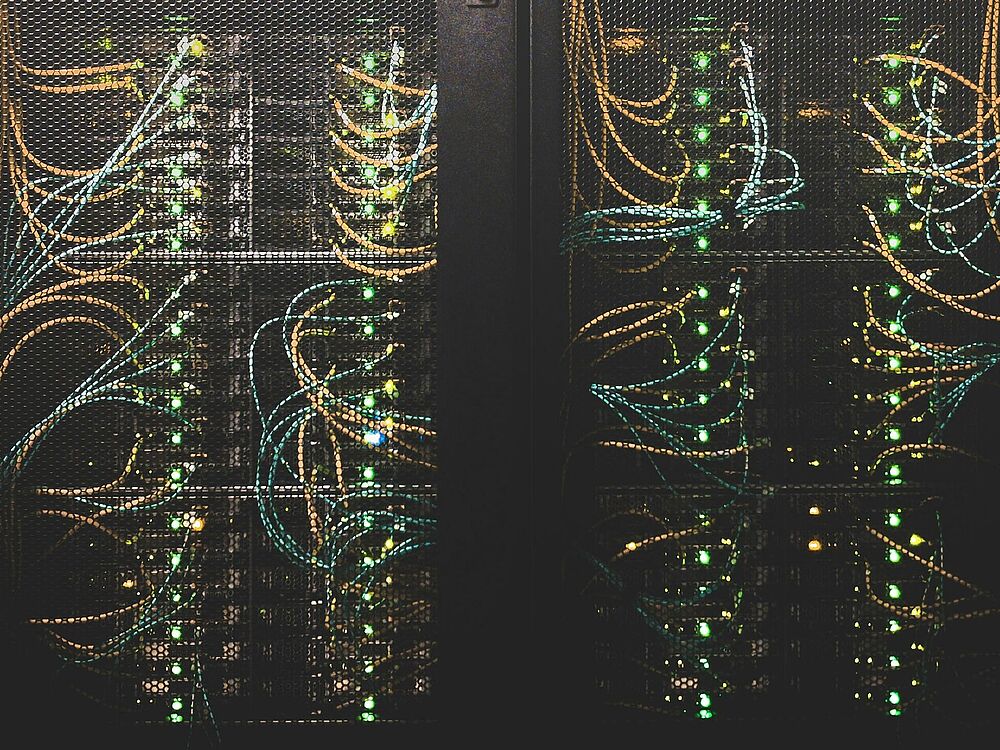

With the proliferation of deep learning (DL), inference serving systems have become critical for the deployment of DL-powered applications. An inference serving system, typically deployed in a large-scale data center, takes existing pre-trained DL models and creates multiple instances of them to serve incoming inference requests at a high rate. The main challenge is to minimize the total resource consumption for serving a given number of inference requests while respecting the latency service-level objectives (SLOs) of those requests. Sometimes, processing an inference request involves multiple pre-trained DL models chained or orchestrated in a dataflow graph, which further complicates the problem. To address this challenge, we are exploring how to leverage a modern cloud computing paradigm, namely serverless computing, to enable more flexible resource allocation, and we are investigating auto-scaling mechanisms to enable extreme elasticity for DL inference serving.

Related Publications

- [IPDPS'25] It Takes Two to Tango: Serverless Workflow Serving via Bilaterally Engaged Resource Adaptation

- [SoCC'24] InferCool: Enhancing AI Inference Cooling through Transparent, Non-Intrusive Task Reassignment

- [WWW'24] LambdaGrapher: A Resource-Efficient Serverless System for GNN Serving through Graph Sharing

- [EuroMLSys'24] Sponge: Inference Serving with Dynamic SLOs Using In-Place Vertical Scaling

- [JSys'24] IPA: Inference Pipeline Adaptation to Achieve High Accuracy and Cost-Efficiency

- [RTAS'22] FA2: Fast, Accurate Autoscaling for Serving Deep Learning Inference with SLA Guarantees

Time-Sensitive Mobile/Edge AI Systems

AI technologies have increasingly penetrated applications running on mobile/edge devices, e.g., mobile phones and smart cameras. Yet, deploying state-of-the-art AI models with billions of parameters on resource-constrained mobile/edge devices still faces critical challenges due to the stringent compute and memory requirements of running these models. We are investigating solutions to this timely problem, mainly in two directions: (1) offloading intensive AI inference workloads to an edge or cloud platform where more computational resources are available, while handling the inherent network variability, and (2) optimizing the computations of big AI models to fit them to the resources of mobile/edge devices by leveraging sparsity and locality.

Related Publications

- [MobiCom'25] Uirapuru: Timely Video Analytics for High-Resolution Steerable Cameras on Edge Devices

- [INFOCOM'24] X-Stream: A Flexible, Adaptive Video Transformer for Privacy-Preserving Video Stream Analytics

- [TPDS'24] Graft: Efficient Inference Serving for Hybrid Deep Learning With SLO Guarantees via DNN Re-Alignment

- [RTSS'22] Jellyfish: Timely Inference Serving for Dynamic Edge Networks (Outstanding Paper Award)

- [TPDS'22] HiTDL: High-Throughput Deep Learning Inference at the Hybrid Mobile Edge

- [EuroMLSys'22] Live Video Analytics as a Service

- [SEC'20] Clownfish: Edge and Cloud Symbiosis for Video Stream Analytics

Data Center In-Network Computing

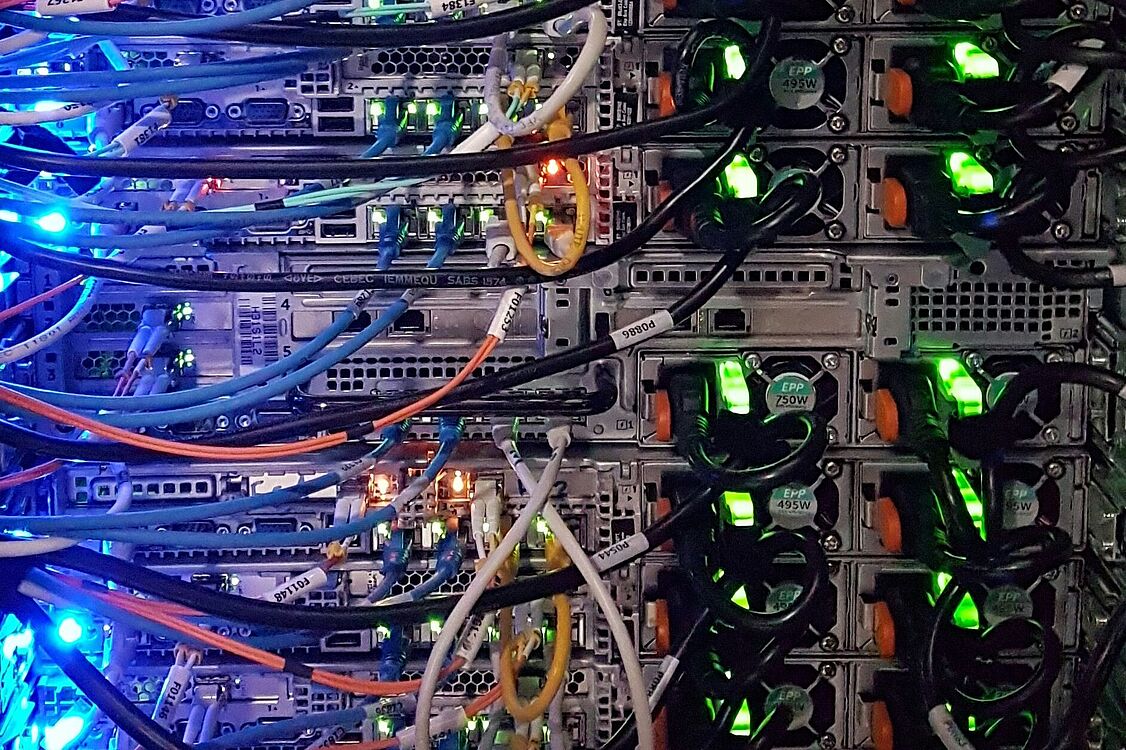

With the slowdown of Moore’s law, large-scale data centers have been employing an increasing number of domain-specific accelerators (GPUs, TPUs, and FPGAs) to deliver the unprecedented performance needed for computation-intensive workloads such as machine learning model training. Under this trend, the emergence of programmable network devices (P4 switches, SmartNICs, and, more recently, DPUs) has motivated a new concept called in-network computing, where network devices programmed in languages such as P4 or NPL are instructed to accelerate application-specific computations (e.g., AllReduce for distributed machine learning training) in addition to running network functions. In-network computing brings tremendous performance benefits for a variety of distributed workloads, but it also poses challenges for the design of data center systems. We target the usability of data center in-network computing and propose programming models and management systems.

Related Publications

- [SC'24] NetCL: A Unified Programming Framework for In-Network Computing

- [ISCC'24] NetNN: Neural Intrusion Detection System in Programmable Networks (Second Best Paper Award)

- [INFOCOM'24] Train Once Apply Anywhere: Effective Scheduling for Network Function Chains Running on FUMES

- [ASPLOS'21] Switches for HIRE: Resource Scheduling for Data Center In-Network Computing

- [HotNets'21] Don't You Worry 'Bout a Packet: Unified Programming for In-Network Computing

- [INFOCOM'20] Letting off STEAM: Distributed Runtime Traffic Scheduling for Service Function Chaining

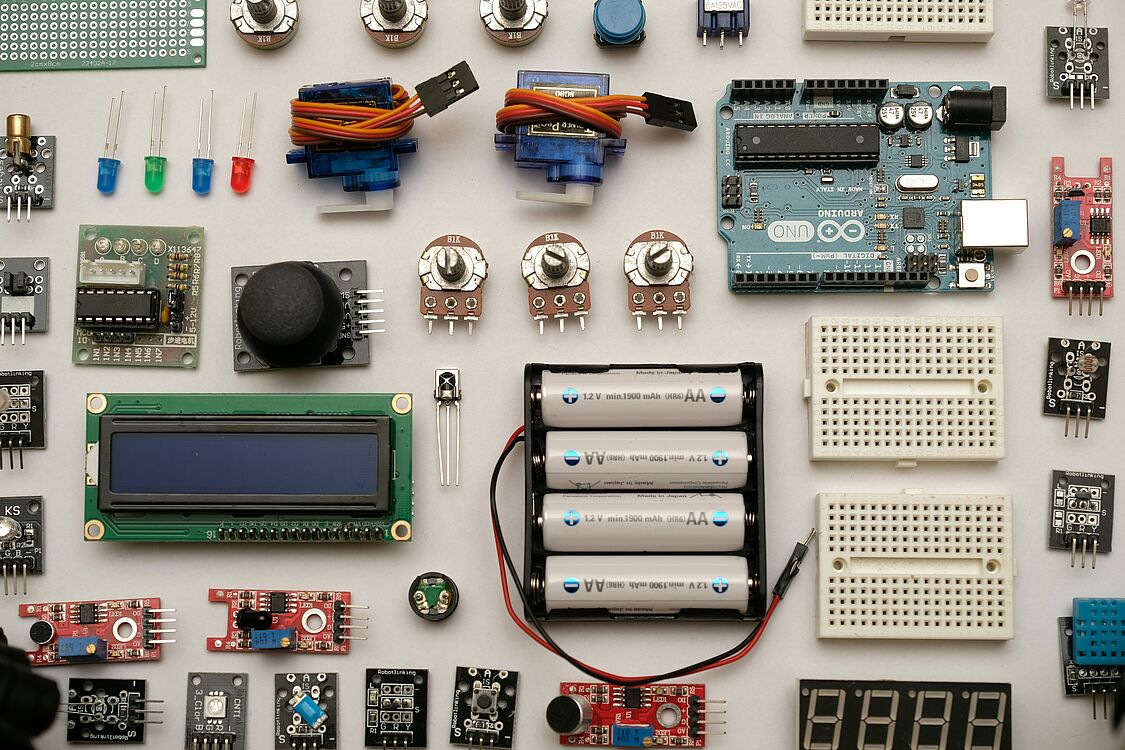

Battery-Free Internet-of-Things

The Internet-of-Things (IoT) has been widely deployed for different tasks in various domains, such as environmental monitoring for smart agriculture and infrastructure anomaly detection in smart cities. Yet, virtually all current IoT systems suffer from a critical issue, namely low sustainability caused by the onboard batteries of IoT devices. Batteries eventually die, and the cost of replacing them on thousands of IoT devices, possibly deployed in hostile environments, is extremely high. If not recycled properly, those batteries also cause environmental pollution, since they contain hazardous chemicals. In light of this challenge, researchers have proposed building IoT systems without batteries by harvesting energy from the environment, a paradigm called battery-free IoT. Battery-free devices, however, suffer from unpredictable intermittency, which significantly complicates IoT system design. We explore how battery-free IoT devices can establish connections to each other and perform sophisticated communication tasks, a fundamental problem that has been overlooked so far.

Related Publications

- [APNet'24] A Little Certainty is All We Need: Discovery and Synchronization Acceleration in Battery-Free IoT

- [TMC'24] Data on the Go: Seamless Data Routing for Intermittently-Powered Battery-Free Sensing

- [IPCCC'23] Routing for Intermittently-Powered Sensing Systems (Best Paper Award)